Virtual address space

This article describes the theoretical, logical model. The ./Linux-implementation chapter describes how Linux actually implements it.

Understanding a process's virtual address space

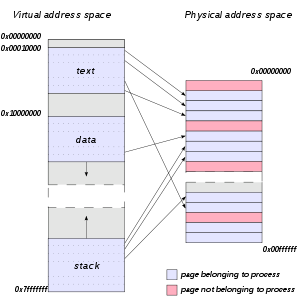

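The **applications** we write ultimately run as **processes**, and every **process** is given its own **virtual address space**. This **virtual address space** covers a very large range. On a 32-bit operating system, a process's address space is $2^{32}$ = 4,294,967,296 bytes, so a **pointer** can take any value in the 4 GB range from 0x00000000 to 0xFFFFFFFF. Every 32-bit process can use a 4 GB **address space**, but that does not mean each process **actually** owns 4 GB of **physical memory**. The address space is only a **virtual address space**: a logical construct that is merely a range of memory addresses. The **physical memory** a process actually gets is typically far smaller than its **virtual address space**. A process's **virtual address space** is private to that process. The **threads** running inside a process can only access memory within that process and cannot reach memory belonging to other processes. As a result, pointers holding the same address in different processes each refer to their own process's contents, without any confusion.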
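The arithmetic above can be checked directly. A 32-bit address can name $2^{32}$ distinct bytes, so the highest byte address is $2^{32} - 1$ = 0xFFFFFFFF. The sketch below recomputes these numbers. It also reports the pointer width of the Python interpreter it runs in, using the standard `struct` format code `"P"` (a native `void *`).

```python
import struct

# A 32-bit address can name 2**32 distinct bytes.
ADDRESS_BITS = 32
vas_bytes = 2 ** ADDRESS_BITS
highest = vas_bytes - 1                    # last addressable byte

print(f"{vas_bytes:,} bytes = {vas_bytes // 2**30} GiB")   # 4,294,967,296 bytes = 4 GiB
print(f"pointer range: 0x{0:08X} .. 0x{highest:08X}")      # 0x00000000 .. 0xFFFFFFFF

# Pointer width of the running interpreter: 4 bytes on a 32-bit build, 8 on a 64-bit build.
pointer_bytes = struct.calcsize("P")
print(f"this interpreter uses {pointer_bytes * 8}-bit pointers")
```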

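From the description above, every resource a process uses is "virtual". Because the applications we write ultimately run as processes, a process is effectively a virtual machine (a virtual computer). That is why a program seems to have the whole computer to itself: on a 32-bit machine, the process appears to use the full 4 GB of memory. Yet anyone slightly familiar with the system knows that the physical memory a process actually uses may be only a few hundred MB, or even just a few MB. This is virtualization: a process uses a virtual version of all the computer's resources. Returning to memory, a process may occupy only a few MB of physical memory, yet each process has 4 GB of virtual memory. That is the essence of virtual memory.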
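On Linux you can observe this gap directly. `/proc/self/status` reports `VmSize`, the total virtual memory of the process, and `VmRSS`, the resident set size, which is the part currently held in physical RAM. The sketch below assumes a Linux `/proc` filesystem. The helper names `parse_status` and `vm_usage_kib` are hypothetical.

```python
import os

def parse_status(text):
    """Extract the kB-valued Vm* fields from /proc/<pid>/status text."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        parts = value.split()
        if key.startswith("Vm") and len(parts) == 2 and parts[1] == "kB":
            fields[key] = int(parts[0])
    return fields

def vm_usage_kib():
    # Linux-only: /proc/self always refers to the calling process.
    with open("/proc/self/status") as f:
        return parse_status(f.read())

if os.path.exists("/proc/self/status"):
    usage = vm_usage_kib()
    print(f"virtual  (VmSize): {usage['VmSize']} KiB")
    print(f"resident (VmRSS):  {usage['VmRSS']} KiB")
```

On a typical run, `VmSize` is much larger than `VmRSS`, because most of the virtual range is reserved or mapped but never touched.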

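A virtual memory area (VMA), also called a memory region, is a contiguous range of addresses within a process's virtual address space. Virtual memory is an abstraction that gives each process the illusion that it has exclusive use of main memory. Every process sees the same uniform view of memory, which is called its virtual address space.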

wikipedia Memory address # Contents of each memory location


Each memory location in a stored-program computer holds a binary number or decimal number of some sort. Its interpretation, as data of some data type or as an instruction, and use are determined by the instructions which retrieve and manipulate it.

NOTE: The passage above is an excellent summary of the interpretation model.
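The interpretation model can be illustrated by decoding the same four bytes in different ways. The bytes themselves never change; only the instruction (here, the `struct` format code) that reads them does. The sketch uses Python's standard `struct` module, and the byte values are chosen arbitrarily.

```python
import struct

raw = bytes([0x00, 0x00, 0x80, 0x3F])    # four bytes as they might sit in memory

as_uint32 = struct.unpack("<I", raw)[0]   # little-endian unsigned 32-bit integer
as_float32 = struct.unpack("<f", raw)[0]  # little-endian IEEE 754 single precision
as_uint8 = list(raw)                      # four independent unsigned bytes

print(as_uint32)   # 1065353216
print(as_float32)  # 1.0
print(as_uint8)    # [0, 0, 128, 63]
```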

Address space in application programming

In modern multitasking environment, an application process usually has in its address space (or spaces) chunks of memory of following types:

1) Machine code, including:

- program's own code (historically known as code segment or text segment);

- shared libraries.

2) Data, including:

- initialized data (data segment);

- uninitialized (but allocated) variables;

- run-time stack;

- heap;

- shared memory and memory mapped files.

Some parts of the address space may not be mapped at all.

NOTE: The classification of VMAs above is clearer. It follows the "function and data model" and lets us examine VMAs from a higher-level perspective.
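On Linux, these chunks appear as individual lines of `/proc/<pid>/maps`. The sketch below is a heuristic classifier, not a kernel API. It guesses a category from each line's permission bits and pathname. The pseudo-paths `[heap]` and `[stack]` are what the kernel prints, but the category labels are this sketch's own.

```python
import os
from collections import Counter

def classify(line: str) -> str:
    """Heuristically assign one /proc/<pid>/maps line to a category from the list above."""
    fields = line.split(maxsplit=5)
    perms = fields[1]                                  # e.g. "r-xp"
    path = fields[5].strip() if len(fields) == 6 else ""
    if path == "[heap]":
        return "heap"
    if path.startswith("[stack"):
        return "run-time stack"
    if "x" in perms:
        return "machine code"                          # program text or shared library
    if perms[3] == "s":
        return "shared memory / shared mapping"
    if perms.startswith("---"):
        return "inaccessible (guard or reserved)"
    if path and not path.startswith("["):
        return "file-backed data"                      # e.g. a library's .data or .rodata
    return "anonymous data"                            # e.g. BSS or malloc'd memory

if os.path.exists("/proc/self/maps"):                 # Linux only
    with open("/proc/self/maps") as f:
        print(Counter(classify(line) for line in f))
```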

wikipedia Virtual address space

In computing, a virtual address space (VAS) or address space is the set of ranges of virtual addresses that an operating system makes available to a process. The range of virtual addresses usually starts at a low address and can extend to the highest address allowed by the computer's instruction set architecture and supported by the operating system's pointer size implementation, which can be 4 bytes for 32-bit or 8 bytes for 64-bit OS versions. This provides several benefits, one of which is security through process isolation assuming each process is given a separate address space.

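NOTE: Process isolation is essential. When multiple processes run on an OS, the OS must schedule them, so it may suspend one process, switch to another, and resume the suspended process some time later. For processes to be interruptible in this way, isolation between them is crucial: the address space of a suspended process must be protected from the processes that are currently running.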
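You can observe isolation with `os.fork()`, which is POSIX only. After the fork, parent and child each have their own copy of the address space. An object therefore sits at the same virtual address in both, yet a write in the child is invisible to the parent. The sketch relies on a CPython implementation detail: `id()` returns the object's memory address. `fork_demo` is a hypothetical helper name.

```python
import os

def fork_demo():
    """Return (parent address, child address, parent text, child text)."""
    buf = bytearray(b"parent")
    parent_addr = id(buf)            # CPython detail: id() is the object's virtual address
    r, w = os.pipe()
    pid = os.fork()                  # POSIX only
    if pid == 0:                     # child: works on its own copy of the address space
        try:
            os.close(r)
            buf[:] = b"child!"       # modify the object in place; its id() does not change
            os.write(w, f"{id(buf)} {buf.decode()}".encode())
        finally:
            os._exit(0)              # leave immediately, skipping the parent's cleanup code
    os.close(w)
    child_addr, child_text = os.read(r, 100).decode().split()
    os.close(r)
    os.waitpid(pid, 0)
    return parent_addr, int(child_addr), buf.decode(), child_text

print(fork_demo())  # same address twice, but 'parent' in the parent and 'child!' in the child
```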

Example

When a new application on a 32-bit OS is executed, the process has a 4 GiB VAS: each one of the memory addresses (from 0 to $2^{32} - 1$) in that space can have a single byte as a value. Initially, none of them have values ('-' represents no value). Using or setting values in such a VAS would cause a memory exception.

NOTE: "32-bit" above refers to the OS's word size.

```
            0                                          4 GiB
VAS         |----------------------------------------------|
```
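Touching a '-' address, one with nothing mapped behind it, produces the memory exception described above. On POSIX systems it arrives as the signal SIGSEGV. The sketch runs the faulting access in a child interpreter so the crash does not take the caller down. It assumes a POSIX system on which page 0 is never mapped, which is the case for typical Linux configurations. It uses `ctypes.string_at(0)`, which reads from a null pointer.

```python
import signal
import subprocess
import sys

# Read from virtual address 0, which the OS deliberately leaves unmapped.
FAULTING_CODE = "import ctypes; ctypes.string_at(0)"

result = subprocess.run([sys.executable, "-c", FAULTING_CODE], capture_output=True)

# On POSIX, a negative return code means "killed by signal number -returncode".
if result.returncode < 0:
    print("child killed by", signal.Signals(-result.returncode).name)  # typically SIGSEGV
else:
    print("unexpected exit status", result.returncode)
```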

Then the application's executable file is mapped into the VAS. Addresses in the process VAS are mapped to bytes in the exe file. The OS manages the mapping:

```
            0                                          4 GiB
VAS         |---vvvvvvv------------------------------------|
mapping         |-----|
file bytes      app.exe
```

The v's are values from bytes in the mapped file. Then, required DLL files are mapped (this includes custom libraries as well as system ones such as kernel32.dll and user32.dll):

```
            0                                          4 GiB
VAS         |---vvvvvvv----vvvvvv---vvvv-------------------|
mapping         |||||||    ||||||   ||||
file bytes      app.exe    kernel   user
```

The process then starts executing bytes in the exe file. However, the only way the process can use or set '-' values in its VAS is to ask the OS to map them to bytes from a file. A common way to use VAS memory in this way is to map it to the page file. The page file is a single file, but multiple distinct sets of contiguous bytes can be mapped into a VAS:

```
            0                                          4 GiB
VAS         |---vvvvvvv----vvvvvv---vvvv----vv---v----vvv--|
mapping         |||||||    ||||||   ||||    ||   |    |||
file bytes      app.exe    kernel   user    system_page_file
```
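On POSIX systems, the closest equivalent of asking the OS to back a '-' region with page-file storage is an anonymous mapping. This is `mmap` called with no file, passing a file descriptor of -1. The kernel backs such a mapping with zero-filled pages that can be written out to swap. On Windows, Python's `mmap(-1, ...)` is backed by the system paging file. The sketch below uses only Python's standard `mmap` module.

```python
import mmap

PAGE = mmap.PAGESIZE

# fileno -1: an anonymous mapping, backed by swap / the page file rather than a named file.
region = mmap.mmap(-1, 2 * PAGE)

fresh = region[:4]            # brand-new anonymous pages are zero-filled
region[:5] = b"hello"         # the region is readable and writable
greeting = region[:5]
region.close()                # unmap: these addresses hold no values again

print(fresh, greeting)        # b'\x00\x00\x00\x00' b'hello'
```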

And different parts of the page file can map into the VAS of different processes:

```
            0                                          4 GiB
VAS 1       |---vvvv-------vvvvvv---vvvv----vv---v----vvv--|
mapping         ||||       ||||||   ||||    ||   |    |||
file bytes      app1 app2  kernel   user    system_page_file
mapping              ||||  ||||||   ||||       ||   |
VAS 2       |--------vvvv--vvvvvv---vvvv-------vv---v------|
```
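In the diagram, the same kernel and user bytes are mapped into both VAS 1 and VAS 2. On POSIX you can reproduce this kind of sharing with an anonymous `mmap` created with `MAP_SHARED` before `os.fork()`. Parent and child then map the very same pages, whereas ordinary memory gets a private copy in each process. The sketch assumes Linux or another POSIX system. `shared_write_demo` is a hypothetical helper name.

```python
import mmap
import os

def shared_write_demo() -> bytes:
    # MAP_SHARED: after fork, parent and child map the very same physical pages.
    shared = mmap.mmap(-1, mmap.PAGESIZE, flags=mmap.MAP_SHARED)
    pid = os.fork()                   # POSIX only
    if pid == 0:                      # child process
        try:
            shared[:6] = b"child!"
        finally:
            os._exit(0)
    os.waitpid(pid, 0)                # wait until the child has written and exited
    data = shared[:6]                 # the parent sees the child's write
    shared.close()
    return data

print(shared_write_demo())  # b'child!'
```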

On Microsoft Windows 32-bit, by default, only 2 GiB are made available to processes for their own use.[2] The other 2 GiB are used by the operating system. On later 32-bit editions of Microsoft Windows it is possible to extend the user-mode virtual address space to 3 GiB while only 1 GiB is left for kernel-mode virtual address space by marking the programs as IMAGE_FILE_LARGE_ADDRESS_AWARE and enabling the /3GB switch in the boot.ini file.

On Microsoft Windows 64-bit, in a process running an executable that was linked with /LARGEADDRESSAWARE:NO, the operating system artificially limits the user mode portion of the process's virtual address space to 2 GiB. This applies to both 32- and 64-bit executables.[5][6] Processes running executables that were linked with the /LARGEADDRESSAWARE:YES option, which is the default for 64-bit Visual Studio 2010 and later, have access to more than 2 GiB of virtual address space: Up to 4 GiB for 32-bit executables, up to 8 TiB for 64-bit executables in Windows through Windows 8, and up to 128 TiB for 64-bit executables in Windows 8.1 and later.

Allocating memory via C's malloc establishes the page file as the backing store for any new virtual address space. However, a process can also explicitly map file bytes.
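With Python's standard `mmap` module, explicitly mapping file bytes looks like the sketch below. The file's bytes become addressable memory, and writes through the mapping land in the file itself. The sketch uses a throwaway temporary file.

```python
import mmap
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "data.bin")
    with open(path, "wb") as f:
        f.write(b"hello, file")          # a file must be non-empty to be mapped

    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as view:
        first = view[:5]                 # reading memory reads file bytes
        view[:5] = b"HELLO"              # writing memory modifies the file
        view.flush()                     # push dirty pages back to the file

    with open(path, "rb") as f:
        after = f.read()

print(first, after)   # b'hello' b'HELLO, file'
```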

Linux

For x86 CPUs, Linux 32-bit allows splitting the user and kernel address ranges in different ways: 3G/1G user/kernel (default), 1G/3G user/kernel or 2G/2G user/kernel.

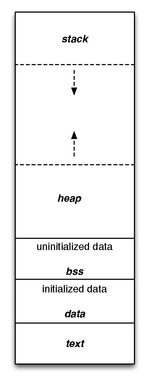
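Each split simply moves the boundary where user space ends and kernel space begins within the 4 GiB range. On 32-bit x86 Linux this boundary corresponds to the kernel constant `PAGE_OFFSET`, which is 0xC0000000 with the default 3G/1G split. The sketch below only does the arithmetic. The boundaries are computed, not read from a running kernel.

```python
GiB = 2 ** 30
ADDRESS_SPACE = 4 * GiB                 # the whole 32-bit virtual address space

splits = {}
for user_gib, kernel_gib in [(3, 1), (2, 2), (1, 3)]:
    boundary = user_gib * GiB           # first kernel address == size of user space
    assert boundary + kernel_gib * GiB == ADDRESS_SPACE
    splits[f"{user_gib}G/{kernel_gib}G"] = boundary
    print(f"{user_gib}G/{kernel_gib}G: user 0x00000000-0x{boundary - 1:08X}, "
          f"kernel 0x{boundary:08X}-0xFFFFFFFF")
```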

wikipedia Data segment # Program memory

A computer program memory can be largely categorized into two sections: read-only and read/write. This distinction grew from early systems holding their main program in read-only memory such as Mask ROM, PROM or EEPROM. As systems became more complex and programs were loaded from other media into RAM instead of executing from ROM, the idea that some portions of the program's memory should not be modified was retained. These became the .text and .rodata segments of the program, and the remainder which could be written to divided into a number of other segments for specific tasks.

This shows the typical layout of a simple computer's program memory with the text, various data, and stack and heap sections.

Figure: typical program memory layout, with the Text, Data, BSS, Heap, and Stack sections.
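In a running process, the read-only/read-write split described above shows up as page permissions on each mapping. Text and .rodata are typically mapped without write permission, so a stray write faults. Python's `mmap` module can create a read-only mapping of a file. However, Python checks the access mode itself and raises `TypeError` before the write reaches the hardware. This sketch therefore illustrates the permission model rather than an actual page fault. The file contents are an arbitrary stand-in.

```python
import mmap
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "text.bin")
    with open(path, "wb") as f:
        f.write(b"\x90" * 16)             # arbitrary stand-in for read-only program bytes

    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as ro:
        readable = ro[:4]                 # reading is allowed
        try:
            ro[0] = 0                     # attempt to write to a read-only mapping
            write_rejected = False
        except TypeError:                 # Python rejects writes to ACCESS_READ maps
            write_rejected = True

print(readable, write_rejected)
```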

Memory layout of a multithreaded process

As mentioned earlier, each thread has its own call stack. How are these call stacks laid out in the address space? This section discusses that question.

stackoverflow The memory layout of a multithreaded process

I just tested it with a short Python "program" in the interactive interpreter:

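```python
import threading
import time

def d():
    time.sleep(120)  # keep the thread (and therefore its stack) alive for two minutes

# Start 250 threads; each one gets its own call stack mapped into the process.
t = [threading.Thread(target=d) for _ in range(250)]
for i in t:
    i.start()
```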

Then I pressed ^Z and looked at the appropriate /proc/.../maps file for this process.

It showed me

```
00048000-00049000 ---p 00000000 00:00 0
00049000-00848000 rw-p 00000000 00:00 0 [stack:28625]
00848000-00849000 ---p 00000000 00:00 0
00849000-01048000 rw-p 00000000 00:00 0 [stack:28624]
01048000-01049000 ---p 00000000 00:00 0
01049000-01848000 rw-p 00000000 00:00 0 [stack:28623]
01848000-01849000 ---p 00000000 00:00 0
01849000-02048000 rw-p 00000000 00:00 0 [stack:28622]
...
47700000-47701000 ---p 00000000 00:00 0
47701000-47f00000 rw-p 00000000 00:00 0 [stack:28483]
47f00000-47f01000 ---p 00000000 00:00 0
47f01000-48700000 rw-p 00000000 00:00 0 [stack:28482]
...
bd777000-bd778000 ---p 00000000 00:00 0
bd778000-bdf77000 rw-p 00000000 00:00 0 [stack:28638]
bdf77000-bdf78000 ---p 00000000 00:00 0
bdf78000-be777000 rw-p 00000000 00:00 0 [stack:28639]
be777000-be778000 ---p 00000000 00:00 0
be778000-bef77000 rw-p 00000000 00:00 0 [stack:28640]
bef77000-bef78000 ---p 00000000 00:00 0
bef78000-bf777000 rw-p 00000000 00:00 0 [stack:28641]
bf85c000-bf87d000 rw-p 00000000 00:00 0 [stack]
```

which shows what I already suspected: the stacks are allocated with a relative distance which is (hopefully) large enough.

The stacks have a relative distance of 8 MiB (this is the default value; it is possible to set it otherwise), and one page at the top is protected in order to detect a stack overflow.

The one at the bottom is the "main" stack; it can - in this example - grow until the next one is reached.
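The 8 MiB figure can be checked against the listing itself. The sketch below parses the first six `maps` lines quoted above. It then computes the distance between consecutive thread stacks and the size of each inaccessible guard mapping. `ranges` is a hypothetical helper name.

```python
SAMPLE = """\
00048000-00049000 ---p 00000000 00:00 0
00049000-00848000 rw-p 00000000 00:00 0 [stack:28625]
00848000-00849000 ---p 00000000 00:00 0
00849000-01048000 rw-p 00000000 00:00 0 [stack:28624]
01048000-01049000 ---p 00000000 00:00 0
01049000-01848000 rw-p 00000000 00:00 0 [stack:28623]
"""

def ranges(text, perms):
    """Yield (start, end) for every maps line whose permission string equals perms."""
    for line in text.splitlines():
        addr, line_perms = line.split()[:2]
        if line_perms == perms:
            start, end = (int(x, 16) for x in addr.split("-"))
            yield start, end

stacks = list(ranges(SAMPLE, "rw-p"))
guards = list(ranges(SAMPLE, "---p"))

spacing = [b[0] - a[0] for a, b in zip(stacks, stacks[1:])]
print([s // 2**20 for s in spacing])           # MiB between stack starts: [8, 8]
print({end - start for start, end in guards})  # guard size in bytes: {4096}
print({end - start for start, end in stacks})  # usable stack bytes: {8384512}
```

In the sample, each `---p` guard mapping sits directly below the `rw-p` stack it protects, on the lower-address side. That is the direction in which these stacks grow, so an overflow runs into the guard page and faults.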