Use checksums to find duplicate files

The approach is mainly based on hash comparison.

clonefileschecker Hashing Algorithms to Find Duplicates

NOTE:

1. The approach the author proposes is really just a (hash) comparison based on checksums; there is not much novelty in it.

Finding duplicate files is a hectic task when you have millions of files spread all over your computer. To check if two files are duplicates of each other, we should do a one-to-one check for the suspect files. Even though this is simple for smaller files, performing a one-to-one (byte-to-byte) comparison for large files is a massively time-consuming task.
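For reference, the one-to-one (byte-to-byte) check described above can be sketched in Python as follows. The function name `same_bytes` and the chunk size are illustrative assumptions, not something the article specifies. The sketch shows why the check is costly: both files have to be read in full whenever they really are duplicates.

```python
import os


def same_bytes(path_a, path_b, chunk_size=64 * 1024):
    """Compare two files chunk by chunk; True only if every byte matches."""
    # Files of different sizes can never be duplicates, so skip the reads.
    if os.path.getsize(path_a) != os.path.getsize(path_b):
        return False
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        while True:
            chunk_a = fa.read(chunk_size)
            chunk_b = fb.read(chunk_size)
            if chunk_a != chunk_b:
                return False
            if not chunk_a:  # both files ended at the same point
                return True
```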

Imagine comparing two gigantic files to check whether they are duplicates: the time and effort required is probably enough to make you give up. An easy way to remove duplicates is to use an all-purpose duplicate cleaner such as Clone Files Checker. It works on the basis of highly advanced algorithms and saves you time in organizing files.

Let’s imagine that we are downloading a large file over the Internet and need to make sure that it was not modified by a third party during transmission, as would happen in a man-in-the-middle attack. Comparing every byte against the original over the Internet would take a long time and consume a significant portion of your bandwidth.

So, how can we easily compare two files in a manner that is credible yet not at all laborious?

There are multiple ways to do so, including hash comparison, which is turning out to be highly popular and reliable.

NOTE:

1. Mainly based on hash comparison.

What is a hash?

A hash function is a mathematical function that takes objects as input and produces a number or a string as output.

These input objects can vary from numbers and strings (text) to large byte streams (files or network data). The output is usually known as the hash of the input.

The main properties of a hash function are:

1. Easy to compute

2. Relatively small output

Example

We can define a hash function to get the hash of the numbers from 0 to 9999.

h(x) = x mod 100

In the above-mentioned hash function, you can see that there is a significant probability of getting the same hash (a collision) for two different inputs (e.g. 1 and 1001).
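A small Python sketch makes the collision rate of this example concrete. It applies h(x) = x mod 100 to every input from 0 to 9999 and groups the inputs by hash value; the variable name `buckets` is chosen here purely for illustration.

```python
from collections import defaultdict


def h(x):
    """The toy hash function from the example: h(x) = x mod 100."""
    return x % 100


# Group every input from 0 to 9999 by its hash value.
buckets = defaultdict(list)
for x in range(10000):
    buckets[h(x)].append(x)

print(h(1), h(1001))      # 1 1  (a collision)
print(len(buckets))       # 100 distinct hash values
print(len(buckets[1]))    # 100 inputs share the hash value 1
```

With 10,000 inputs and only 100 possible outputs, every hash value is shared by 100 different inputs, so this function is useless for telling inputs apart.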

So, it is always good to have a better hash function with fewer collisions, which makes it difficult to find two inputs which give the same output.

While hashing is used in many applications such as hash-tables (data structure), compression and encryption, it is also used to generate checksums of files, network data and many other input types.

Checksum

The checksum is a small sized datum, generated by applying a hash function on a large chunk of data. This hash function should have a minimum rate of collisions such that the hashes for different inputs are almost unique. That means, getting the same hash for different inputs is nearly impossible in practice.
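As a minimal sketch of generating such a checksum, the function below hashes a file with Python's standard `hashlib` module. The name `file_checksum`, the default algorithm and the chunk size are assumptions made for illustration. Reading in chunks keeps memory use constant even for very large files.

```python
import hashlib


def file_checksum(path, algorithm="sha256", chunk_size=64 * 1024):
    """Return the hex digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

However large the file is, the result is a short fixed-length string (64 hex characters for SHA-256).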

These checksums are used to verify whether a segment of data has been modified. The checksum (from a known hash function) of the received data is compared with the checksum provided by the sender to verify the purity of the segment. TCP/IP protocols apply the same idea, carrying simple checksums in packet headers to detect corruption in transit.

NOTE:

1. What is called "purity" here is really data integrity.

In this way, if we generate two checksums for two files, we can declare that the two files aren’t duplicates if the checksums are different. If the checksums are equal, we can claim that the files are identical, considering the fact that getting the same hash for two different files is almost impossible.

NOTE:

1. The paragraph above summarizes the author's core point.
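The rule stated above (different checksums prove the files differ; equal checksums are taken to mean the files are identical) can be sketched as follows. The helper names `checksum` and `looks_like_duplicate` are hypothetical, and SHA-256 is only one possible choice of hash function.

```python
import hashlib


def checksum(path, algorithm="sha256"):
    """Hash a file in chunks and return the hex digest."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def looks_like_duplicate(path_a, path_b):
    """Equal checksums are treated as 'identical'; unequal ones prove a difference."""
    return checksum(path_a) == checksum(path_b)
```

Note the asymmetry: a `False` result is certain, while a `True` result rests on the assumption that collisions are practically impossible.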

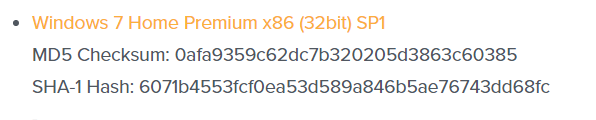

Many websites also provide hashes of their files on the download pages, especially when the files are hosted on different servers. In such a scenario, the user can verify the authenticity of a file by comparing the provided hash with the one generated from the downloaded file.
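A download check of this kind might look like the sketch below. The function name `matches_published_hash` is an assumption for illustration, and the published value is whatever hex string the download page shows for the chosen algorithm.

```python
import hashlib


def matches_published_hash(path, published_hex, algorithm="sha256"):
    """Return True if the file's digest equals the hash shown on the download page."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    # Published hashes are sometimes upper case or padded with whitespace.
    return digest.hexdigest() == published_hex.strip().lower()
```

This only helps if the published hash itself comes from a trusted source; a hash served from the same compromised page as the file proves nothing.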

There are various hashing functions that are used to generate checksums. Here are some popular ones.

| Name | Output size |
|---|---|
| MD5 | 128 bits |
| SHA-1 | 160 bits |
| SHA-256 | 256 bits |
| SHA-512 | 512 bits |
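The output sizes in the table can be confirmed with Python's standard `hashlib` module, whose hash objects expose a `digest_size` attribute measured in bytes. This is a quick sketch; the dictionary name `sizes_in_bits` is illustrative.

```python
import hashlib

# digest_size is reported in bytes, so multiply by 8 to get bits.
sizes_in_bits = {
    name: hashlib.new(name).digest_size * 8
    for name in ("md5", "sha1", "sha256", "sha512")
}

for name, bits in sizes_in_bits.items():
    print(f"{name:8} {bits} bits")
```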

MD5

MD5 is a popular hashing function that was initially used for cryptographic purposes. Although it generates a 128-bit output, it is no longer recommended for security purposes because of vulnerabilities that later surfaced, practical collision attacks in particular. However, it can still be used as a checksum to verify the integrity of a file (or a data segment), that is, to detect unintentional changes or corruption.

SHA-1

SHA-1 (Secure Hash Algorithm 1) is a cryptographic hashing function that generates a 160-bit output. It is no longer considered secure after a number of attacks, and web browsers have already stopped accepting SSL certificates based on SHA-1.

The later SHA versions (SHA-256 and SHA-512) are more secure, as they incorporate significant improvements, and no practical attacks against them were known as of the time this article was published.

Features of a strong hash function

- Should be open – Everybody should know how the hashing is performed

- Easy to generate – Should not take too much time to generate the output

- Fewer collisions – The probability of getting the same hash for two inputs should be near to zero

- Hard to back-trace – Given a hash, recovering the original input should not be possible

easyfilerenamer Hash MD5 Check – The Best Method to Find Duplicate Files

NOTE:

1. Same idea as the article above.

One of the biggest problems of every computer user is the piling up of junk in their system. This happens due to many factors, and the creation of duplicate files is one of them. Duplicate files decrease the performance of a system drastically. They aren’t created by the user intentionally, but nevertheless, they will clutter the hard drive and cause disorganization of data without the user even knowing about them.

Luckily enough, some genius people have worked endlessly to come up with unique state-of-the-art methods that make scanning for and getting rid of duplicate data of all kinds a very straightforward matter. So let’s jump into some detail of the mechanism that is employed to scan for duplicates and then we shall move on to a genius solution of its own kind. But let’s just introduce it to you before we go into more details. Clone Files Checker is its name, and its job is to go after those pesky duplicates on Windows as well as Mac systems!

Why Use MD5 Check to find Duplicate Files?

MD5 dates back to 1991, when it was designed by Ronald Rivest as a successor to MD4; it produces a 128-bit digest. It was initially used for cryptographic purposes, but the discovery of several vulnerabilities meant it was eventually retired from security-sensitive use. However, the algorithm is still used to verify the integrity of a file, that is, to check whether it has suffered data corruption.

The benefit of an MD5 check over other cryptographic hash algorithms is that it is typically faster to compute, which can give a noticeable performance gain when verifying data compared with SHA-1, SHA-2 and SHA-3.
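The speed claim is easy to measure rather than take on faith. The sketch below times several `hashlib` algorithms on the same 8 MiB buffer; the helper name `time_hash`, the buffer size and the repeat count are arbitrary choices for illustration. Results depend heavily on the CPU and on how Python's hashing backend was built, so no particular ranking is assumed here.

```python
import hashlib
import time


def time_hash(name, data, repeats=5):
    """Return the best wall-clock time in seconds to hash data with one algorithm."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        hashlib.new(name, data).digest()
        best = min(best, time.perf_counter() - start)
    return best


data = b"\x00" * (8 * 1024 * 1024)  # 8 MiB of sample input
timings = {
    name: time_hash(name, data)
    for name in ("md5", "sha1", "sha256", "sha3_256")
}

# Print the algorithms from fastest to slowest on this machine.
for name, seconds in sorted(timings.items(), key=lambda item: item[1]):
    print(f"{name:9} {seconds * 1000:8.2f} ms")
```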

However, MD5's collision resistance has been broken. The good news is that for finding duplicate files this is not really an issue. A collision means two different inputs producing the same output when hashed; collision resistance means that such pairs are hard to find. The known MD5 collisions are deliberately constructed, whereas two ordinary files hashing to the same value by accident is extremely unlikely.

A good MD5-based duplicate finder will use the hash together with the file size, type, and last byte value. This is because various attributes of two files (size, name, etc.) may be similar, identical, or different, but the hash values will always be the same if the files are duplicates. Checking these together virtually eliminates the chance of deleting a file that isn't a duplicate while cleaning up duplicate data. Therefore, MD5 is a huge blessing when on the lookout for duplicates.
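One way to combine MD5 with cheaper checks is sketched below. It is an illustrative pipeline, not the actual implementation of any product named here: files are first grouped by size (which needs no file reads), and only files sharing a size are hashed. The function names `md5_of_file` and `find_duplicates` are hypothetical, and the file type and last-byte checks mentioned above are left out for brevity.

```python
import hashlib
import os
from collections import defaultdict


def md5_of_file(path, chunk_size=64 * 1024):
    """Return the MD5 hex digest of a file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return lists of paths under root whose size and MD5 both match."""
    # Step 1: group by size; this needs only file metadata.
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    # Step 2: hash only files that share their size with another file.
    by_hash = defaultdict(list)
    for size, paths in by_size.items():
        if len(paths) < 2:
            continue
        for path in paths:
            by_hash[(size, md5_of_file(path))].append(path)

    return [sorted(paths) for paths in by_hash.values() if len(paths) > 1]
```

A cautious tool could add a final byte-by-byte comparison within each group before deleting anything, which removes the remaining reliance on the hash.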

Now let’s take a brief look at a piece of software that uses MD5 to weed out duplicates swiftly and accurately.

Clone Files Checker

Simply put, this software is a death sentence for duplicate data. Whatever kind of duplicate data you have, whether on your local computer, an external hard drive or even your cloud account, the software digs into the details and uses an MD5 check to bring up a list of all the duplicate data that had eaten into your precious hard drive space and was beginning to slow down your system.

From then onwards, it is pretty simple to choose between deleting the duplicates permanently and moving them to a separate folder. It is thanks to the MD5 check and the genius brains behind Clone Files Checker that you can be assured of a swift and accurate scan that will help free up a large amount of hard drive space.